Why Gemini 2.0 Flash is Killing RAG in 2025

Someone finally said it out loud in early 2025 — the battle is on. 🚀

Google just dropped Gemini 2.0 Flash, and guess what? They launched it on my birthday. As an AI enthusiast, what better gift could I ask for? 🎉

But this isn’t just another incremental AI upgrade. This is a statement.

The more I dig into Gemini 2.0 Flash, the more I realize it’s an absolute killer. It’s not quite The Flash from the Justice League, but trust me, this thing is fast enough to put a serious dent in OpenAI’s lead and leave DeepSeek scrambling for relevance.

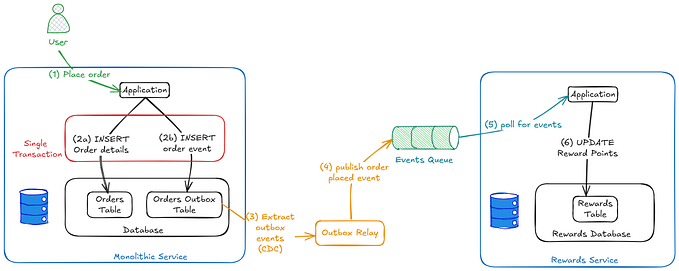

For years, Retrieval-Augmented Generation (RAG) has been the backbone of AI-powered search and reasoning, helping large language models (LLMs) fetch external knowledge before generating responses. But with Gemini 2.0 Flash, Google is rewriting the rules.
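To make "fetch external knowledge before generating responses" concrete, here is a minimal, hypothetical sketch of the retrieve-then-generate pattern. It is a toy, not how any particular product implements RAG: the names `retrieve` and `build_prompt` are made up for illustration, simple word overlap stands in for the embedding-based vector search real systems typically use, and the final call to an LLM is left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    # Toy relevance score: count of word occurrences shared by query and doc.
    # (Assumption: real RAG systems usually use embedding similarity instead.)
    shared = Counter(tokenize(query)) & Counter(tokenize(doc))
    return sum(shared.values())


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Step 1 (retrieval): rank documents by score and keep the top k
    # that share at least one word with the query.
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Step 2 (augmentation): paste the retrieved snippets into the prompt.
    # Step 3 (generation) would send this prompt to an LLM; omitted here.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    knowledge_base = [
        "RAG retrieves external documents before the model answers.",
        "The Flash is a fast superhero from the Justice League.",
        "Vector databases store embeddings for fast retrieval.",
    ]
    print(build_prompt("How does RAG retrieve documents?", knowledge_base))
```

The model only ever sees the handful of snippets the retriever picks, which is exactly the "external lookup" dependency that long-context models like Gemini 2.0 Flash are pitched as reducing.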

This model isn’t just about retrieving data; it’s about processing it natively, reasoning faster, and reducing its dependence on external lookups. If you thought GPT-4 Turbo or Claude were fast, wait until you see this.

Still confused? 🤔 Don’t worry, I’ve got your back.

I’ll be your wingman in this AI battle. But before we dive into the how and why, we need to break down a few key concepts to truly understand why Gemini 2.0 Flash is changing the game.